11 Aug 2017

Transfer to Storages sample

Transfer from Autodesk (BIM 360, Fusion 360) to other storages (Dropbox, Box, OneDrive, Google Drive, Egnyte)

In the past, we had separate samples to integrate Autodesk (BIM 360 Team/Docs, Fusion 360) files with other storage providers. We ended up with multiple samples doing the same task with duplicated code, and all of them shared the same limitation: transferring big files consumed a lot of server resources.

So we decided to redesign the samples into a single, reusable source code framework that supports transferring multiple files and raises the file size limit, regardless of the server capacity. Before explaining how it works, here are the links:

Go ahead and try them!

Now let's go into details.

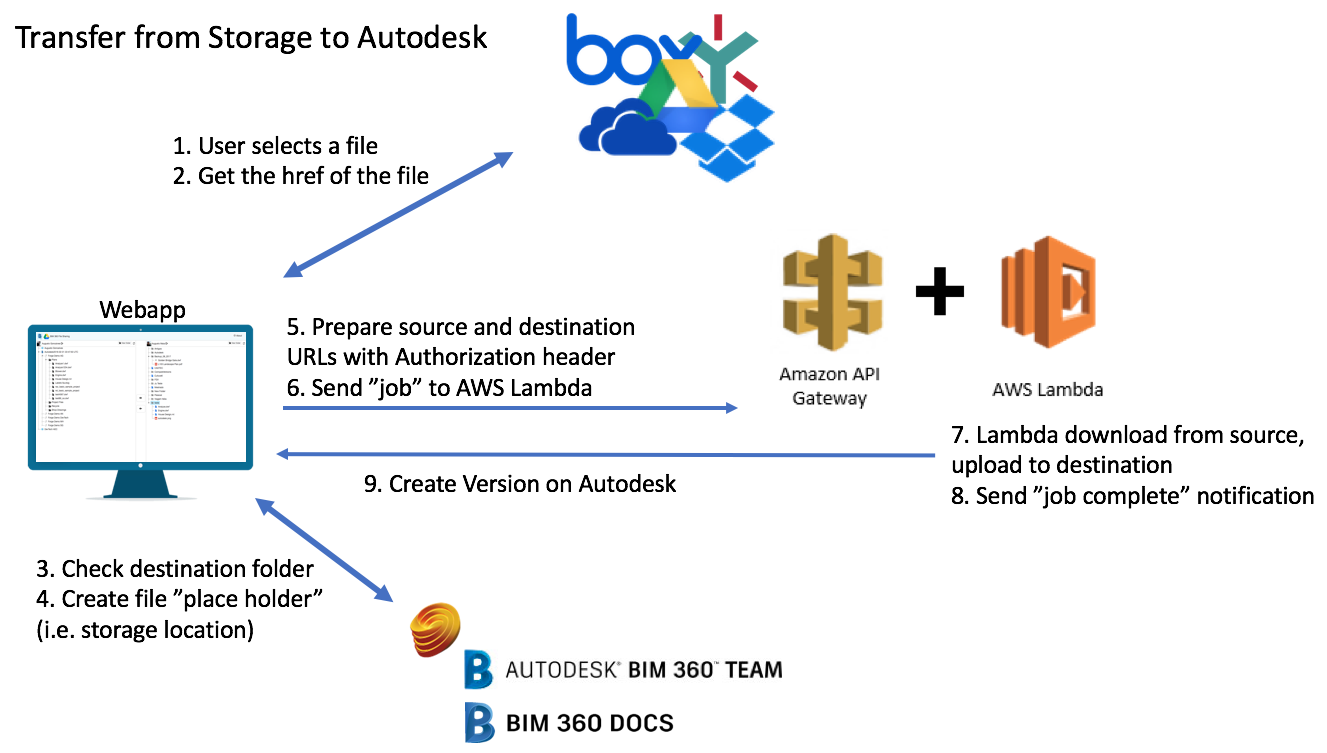

To support this we'll need extra computing power, but we don't want a huge server that will be idle most of the time. AWS Lambda is an interesting solution for that, as described in this previous blog post. As mentioned there, AWS Lambda scales automatically and only when needed, so the main webapp can run on a relatively small (and cheaper) instance and access extra power on demand.

In this scenario, the webapp performs all the lightweight CRU(D) operations, such as creating storage locations and folders or checking whether files exist, and then delegates the transfer itself to AWS Lambda. The following schematic demonstrates how to transfer from Autodesk to storage:

And to transfer from storage to Autodesk we do basically the same:
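Because both directions follow the same pattern, the hand-off from the webapp to the transfer worker can be pictured as one small job description. The sketch below is only an illustration of that idea: `buildTransferJob`, the direction names, and every field name are hypothetical and are not taken from the sample's source code.

```javascript
// Hypothetical job description handed from the webapp to the transfer worker.
// All names here are illustrative assumptions, not the sample's actual format.
const DIRECTIONS = ['autodeskToStorage', 'storageToAutodesk'];

function buildTransferJob(direction, source, destination) {
  if (!DIRECTIONS.includes(direction)) {
    throw new Error('Unknown transfer direction: ' + direction);
  }
  return {
    direction,
    // Where the worker reads the file from
    source: {
      url: source.url,
      headers: { Authorization: 'Bearer ' + source.token },
    },
    // Where the worker writes the file to (PUT unless the caller says otherwise)
    destination: {
      url: destination.url,
      method: destination.method || 'PUT',
      headers: { Authorization: 'Bearer ' + destination.token },
    },
  };
}
```

The point of this shape is that the webapp resolves the locations and tokens up front (the lightweight part), so the worker only has to move bytes from one URL to the other.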

Note that AWS Lambda has some peculiarities:

- Serverless: there is no actual server running our code. It's instantiated on the fly, on demand, which means there is a delay on a cold start. To reduce that, all 5 apps share the same Lambda, increasing the chance of it being already active (AWS keeps the Lambda up for a while after it finishes the task).

- Needs a gateway endpoint: as there is no server, there is no URL address for it. Amazon API Gateway implements the access control and provides the URL we need. Access control is important here, as we don't want others to use our powerful transfer engine.
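To make the gateway point more concrete, here is a minimal sketch of how a webapp could call such an endpoint from Node.js. It assumes the endpoint is protected with an API key, which API Gateway reads from the `x-api-key` header; the post does not say which access control the sample actually uses. `buildGatewayRequest` and `delegateTransfer` are hypothetical helper names.

```javascript
const https = require('https');
const { URL } = require('url');

// Builds the HTTPS request for a gateway endpoint protected by an API key.
// Assumption: API key access control; the real sample may use something else.
function buildGatewayRequest(endpoint, apiKey, job) {
  if (!apiKey) throw new Error('Missing API key for the transfer endpoint');
  const url = new URL(endpoint);
  const body = JSON.stringify(job);
  return {
    options: {
      method: 'POST',
      hostname: url.hostname,
      path: url.pathname + url.search,
      headers: {
        'Content-Type': 'application/json',
        'Content-Length': Buffer.byteLength(body),
        'x-api-key': apiKey, // header API Gateway checks for API keys
      },
    },
    body,
  };
}

// Sends the job to the endpoint and resolves with the HTTP status code.
function delegateTransfer(endpoint, apiKey, job) {
  const { options, body } = buildGatewayRequest(endpoint, apiKey, job);
  return new Promise((resolve, reject) => {
    const req = https.request(options, (res) => {
      res.resume(); // discard the response body
      res.on('end', () => resolve(res.statusCode));
    });
    req.on('error', reject);
    req.end(body);
  });
}
```

Keeping the request building separate from the network call makes the access-control part easy to check without touching AWS: a request without a key is rejected before it ever leaves the webapp.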

Source code

Everything is on GitHub: take a look at the bim360appstore-data.management-nodejs-transfer.storage repo. It includes steps to deploy it on Heroku (simpler) and on AWS Lambda. This previous blog post explains more about the AWS Lambda setup. Note that you'll need one deployment for each storage integration; the STORAGE_NAME environment variable defines which one it is.

Having the same common source code allows us to fix issues once, especially those not specific to a storage, and deploy to all 5 apps at once. With Heroku auto-deploy from GitHub, we can quickly test our changes live. When ready, we deploy to the .autodesk.io live version.
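As an illustration of the one-deployment-per-storage idea, the sketch below reads STORAGE_NAME and rejects anything it does not recognize. Only the variable name comes from the repo description above; the accepted values and the `resolveStorageName` helper are assumptions, so check the repo's README for the values it actually expects.

```javascript
// Assumed values, derived from the storages listed in this post.
// The repo may spell them differently.
const SUPPORTED_STORAGES = ['dropbox', 'box', 'onedrive', 'google', 'egnyte'];

// Returns the normalized storage name for this deployment, or throws
// early so a misconfigured app fails at startup instead of mid-transfer.
function resolveStorageName(env) {
  const name = (env.STORAGE_NAME || '').trim().toLowerCase();
  if (!SUPPORTED_STORAGES.includes(name)) {
    throw new Error(
      'STORAGE_NAME must be one of: ' + SUPPORTED_STORAGES.join(', ')
    );
  }
  return name;
}
```

At startup this would be called as `resolveStorageName(process.env)`, and the result could then select the storage-specific module while the rest of the code stays shared.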

Comments? Suggestions? Send them here.